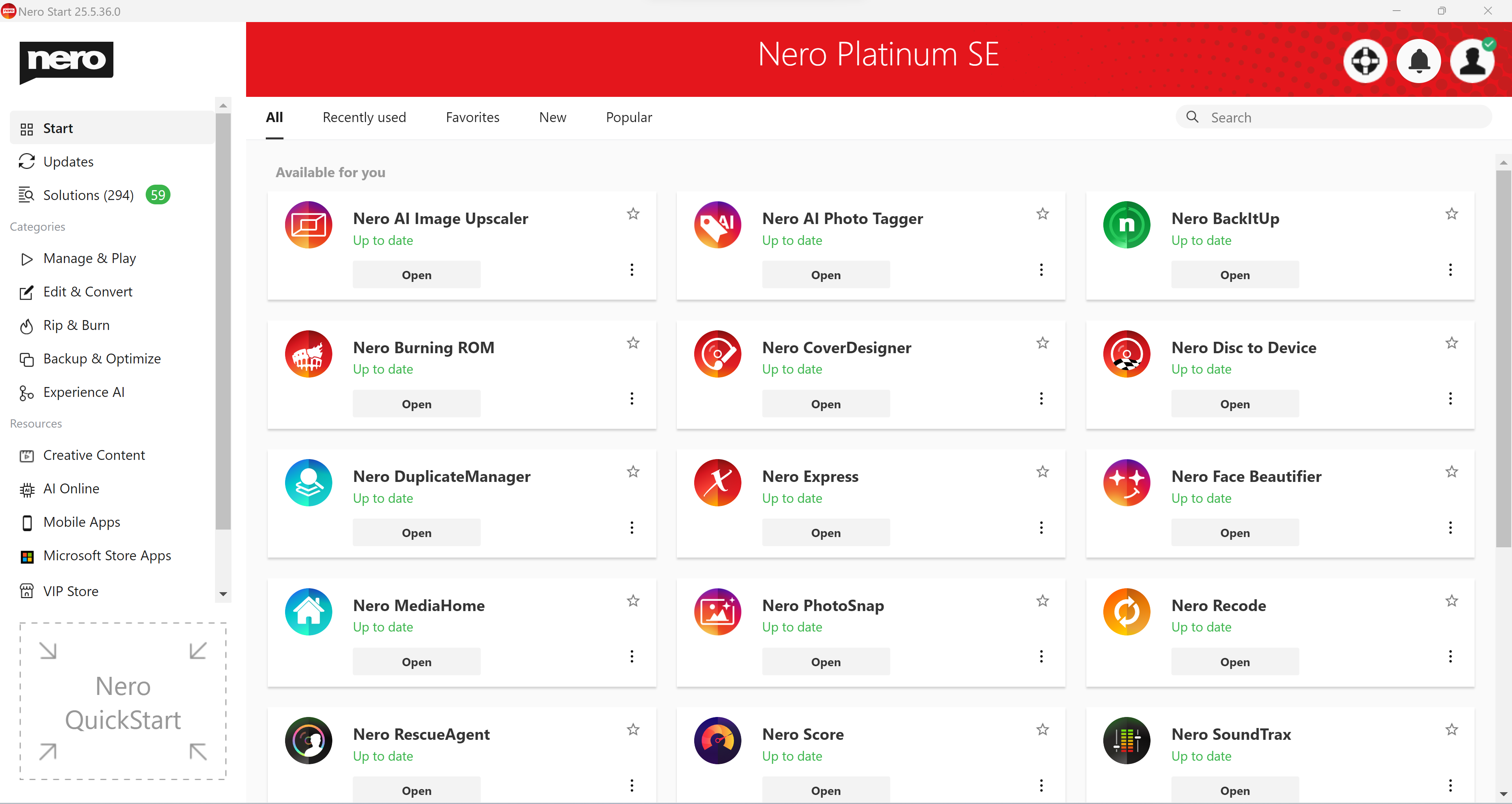

What can AI do for me today?

Generative AI

Updated on 4 June 2024. Focused on end users, not businesses. Text created without using AI.

Gen AI describes algorithms (such as ChatGPT) that can be used to create new content, including audio, code, images, text, simulations, and videos.

Multimodal Language Models

The hottest topic right now! AI can see (images and video), listen and talk, and read and answer in real time.

- OpenAI ChatGPT 4o ("o" for "omni", basis for Microsoft Copilot)

- Google Gemini 1.5 (to be deeply integrated in Android 15, Fall 2024)

- Microsoft Copilot (deeply integrated into Windows 11 on Copilot+PCs)

Example use cases:

- Image Captioning: Multimodal AI allows computers to generate descriptive captions for images. For example, a system can analyze a picture and generate a caption like, “A playful dog chasing a frisbee in the park.”

- Speech Recognition: Multimodal AI is used in speech recognition systems that can transcribe spoken language into text. This technology is widely used in voice assistants and transcription services.

- Video Understanding: It enables AI systems to understand the content of videos. This is valuable for applications like content moderation, video summarization, and more.

- Scam/Threat detection

- Live math tutor while you write on a piece of paper

- Personal assistant (much more powerful than Apple Siri and Amazon Alexa)

Some of these use cases were already possible by combining an LLM with other AI models (e.g. voice recognition), but multimodal models do it all in one.

Large Language Models (LLM)

Allows you to chat with an AI.

- OpenAI ChatGPT (Web, iOS app, Mac app)

- Microsoft Copilot (Integrated in Windows 11, Edge Web Browser, Bing Search engine, GitHub, Office, mobile apps)

- Google Gemini (Integrated in

- Meta Llama

- Amazon Titan

- Anthropic Claude

- many more

Example use cases:

- Text Generation: produce various text formats, such as articles, poems, code, scripts, musical pieces, emails, and letters. It can even mimic specific writing styles, making it a valuable tool for writers and content creators.

- Code Generation: assists developers by writing code snippets, debugging existing code, or even creating simple applications.

- Data Analysis: It can analyze datasets, extract insights, and generate reports, making it useful for data analysts and researchers.

- Translation: Translate text between multiple languages, facilitating communication and research across linguistic barriers.

- Summarization: provides key takeaways from documents, emails, chats, even whole inboxes.

Small language models (SLM)

Small language models are designed to perform well on simpler tasks. They are more accessible and easier to use for organizations with limited resources, and they can be fine-tuned more easily to meet specific needs.

For now, the output quality is lower than that of an LLM.

Audio AI models

- Text 2 Music: Create a song with lyrics from a text prompt e.g. Suno

- Voice 2 Text e.g. YouTube Automatic Subtitles, Resound

- Separate vocals and the individual instruments from a music track e.g. Apple Logic Pro Stem splitter

Image AI models

- Text 2 Image e.g. Midjourney, DALL-E, Bing Image Creator, Nero Muse

- Image 2 Text: AI image annotation/tagging/face recognition e.g. iOS Photos app, Amazon Rekognition

- Image 2 Image: AI effects e.g. Adobe Sensei, Lens AI, Nero Lens

- Automatic object removal e.g. Google Photos app

- Image Restoration: AI scratch removal, black-and-white colorisation e.g. Nero AI

- Image Upscaling e.g. Nero AI Image Upscaler

- Background removal e.g. iOS “Lift subject from photo”, Nero Background Remover

Video AI models

- Text 2 Video e.g. OpenAI Sora, Runway, Synthesia (human presenter synthesis)

- Video 2 Text e.g. OpenAI ChatGPT 4o

- Video 2 Video Effects e.g. CapCut Background Remover, DaVinci Resolve object tracker

- Video Upscaling e.g. Topaz, Nero AI Video Upscaler

3D AI models

- Text 2 Model: describe the model you want and let AI create it for you e.g. Luma AI

- Image 2 Model: take one or several images of an existing object or scene to get a 3D model e.g. the free Apple Reality Composer app

- Text 2 3D Scene e.g. ChatGPT plug-in for Blender

Platforms

Cloud: Most of the above use cases need very powerful hardware and software hosted in data centres to run in a reasonable time, and they consume a lot of electrical energy during processing.

Phone/Tablet: For about four years, Google phones (Tensor) and Apple iPhones (Neural Engine) have had a dedicated AI section in their chips that allows certain processing to be done on-device, e.g. image tagging, voice recognition, and photo optimisation while taking photos (e.g. low-light, moon replacement, upscaling).

Mac: For about four years, Apple M-series Macs (Neural Engine) have had a dedicated AI section in their chips that allows certain processing to be done on-device, e.g. image tagging and voice recognition.

PC:

- NVIDIA RTX graphics cards still dominate the market for AI running locally on a consumer PC. Most AI models are optimised for NVIDIA CUDA and run well on a state-of-the-art gaming PC with the latest NVIDIA graphics card and a lot of RAM e.g. Nero AI Video Upscaler

- Intel and AMD are closing in with their own graphics cards that get closer to NVIDIA spec-wise, but most software still does not fully utilise them

- Intel, AMD, and Qualcomm will release chips in 2024 with additional NPUs (dedicated Neural Processing Units) that will be fully supported by Windows 11 to run more AI tasks locally (e.g. live video conference transcription/translation)

- Microsoft introduced the Copilot+ PCs with Recall and Cocreate features running on the local NPU of devices branded as such

TVs: Real-time AI video upscaling, AI audio/video optimization on device.

Security cameras: Many security cameras come with on-device face, pet, and license plate recognition to trigger certain scenarios (e.g. open the garage when my car approaches, don't trigger the alarm when a family member comes into view or our dog moves around the house). Some cameras offload these tasks to the cloud and therefore require a monthly subscription to provide AI features.

Smart Watches: Include small AI chips to make sense of the data coming from the sensors (location, speed, movement, heart rate, blood oxygen etc.).

Other wearables: Humane AI Pin, Rabbit, and Meta Glasses mostly rely on a cloud connection to process data with AI.

Drones: Route planning, obstacle detection, motor control supported by on-device AI.

Toys: Robot-like toys that entertain kids or teach them the basics of electronics/coding/mechanics/AI.

Robot cleaner/mower: Scanning the environment to find places to clean/trim without running over any items, pets, or wildlife.

Self-driving cars: Not covered here as it is a separate, very complex topic with its own set of specific issues (cost, overpromised and underdelivered, emotions of other drivers, pedestrians, cyclists and many ethical issues).

More and more hardware will see the integration of AI features on device.

On-device AI

The company that provides the platform (hardware, firmware, operating system) is the one best positioned to provide an outstanding local AI experience. Ideally the hardware and the software are from the same company (e.g. Microsoft Surface, Apple iPhone, Google Pixel, LG TV, Tesla, DJI).

Third parties need to rely on APIs provided by the platform companies. Most platform companies give their own apps more access than third-party providers. The stated reasons are data privacy, device security, and potentially malicious apps.

AI Drawbacks

- The carbon footprint of AI is immense, both for training and for actually using the AI. Producing the required hardware for the data centers and providing the electrical power takes a toll on the environment. The hardware replacement cycle is very fast, which adds to the e-waste problem of AI.

- Interacting with a current AI gives you the feeling of interacting with something intelligent, something with consciousness. However, don't be fooled. It is basically all about probabilities: given this input, what is the most likely correct output? So nothing you get from an AI can be taken for granted as correct. Everything needs to be cross-checked. Most providers of AI services put an extra layer on top of the AI that tries to keep it from being offensive, discriminating, making legally binding offers, or hallucinating, with varying success. Some users make it their challenge to trick the AI into doing things it shouldn't do.

- AI models are biased. Any bias in the training data (e.g. male vs. female vs. diverse, white people vs. people of color) will be directly reflected in the answers of the AI model.

- Context: there are technical limits on how big the input can be, which affects e.g. summarising a very large document/book or keeping the context across a long chat or several sessions.
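
A common workaround for the context limit in the last point is to split a long text into smaller pieces, summarise each piece, and then summarise the summaries. The Python sketch below only illustrates that idea. The names `split_into_chunks`, `summarise_long_text` and the `summarise` parameter are made up for this example, and counting words is a simplifying assumption: real AI services measure input size in tokens, and their limits differ from model to model.

```python
from typing import Callable


def split_into_chunks(text: str, max_words: int = 200) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]


def summarise_long_text(
    text: str,
    summarise: Callable[[str], str],
    max_words: int = 200,
) -> str:
    """Summarise each chunk, then summarise the combined partial summaries.

    `summarise` is a hypothetical stand-in for a call to an AI model.
    """
    chunks = split_into_chunks(text, max_words)
    partial = [summarise(chunk) for chunk in chunks]
    if not partial:
        return ""
    if len(partial) == 1:
        return partial[0]
    # Assumption: the joined partial summaries fit into one request.
    # For very long inputs this step would have to be repeated.
    return summarise(" ".join(partial))
```

In practice `summarise` would wrap whichever AI service you use. Splitting this way loses the connections between chunks, which is one reason summaries of very long documents can miss the bigger picture.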